UX Research • Mobile App • UI Design • Wearable Device

Cope: Trauma Management Ecosystem

Cope is a 10-week academic case study that researches and designs a support system built around a wearable AI device. The device helps track and identify when, where, and why trauma-induced triggers occur, and a paired mobile application suggests actions and coping methods.

Problem

Trauma-induced triggers are increasingly left untreated because of barriers such as cost, time, guilt, and a lack of awareness. Left untreated, these triggers can develop into more severe, chronic mental health problems.

Solution

Cope uses a wearable AI device to track and identify when, where, and why trauma-induced triggers occur. Using machine learning, the paired application then suggests actions and coping methods based on the logged trigger activity.

Smartwatch

Users who already own a smartwatch and do not want to purchase the Cope wearable can install the Cope application on their smartwatch instead.

‘Copilot’

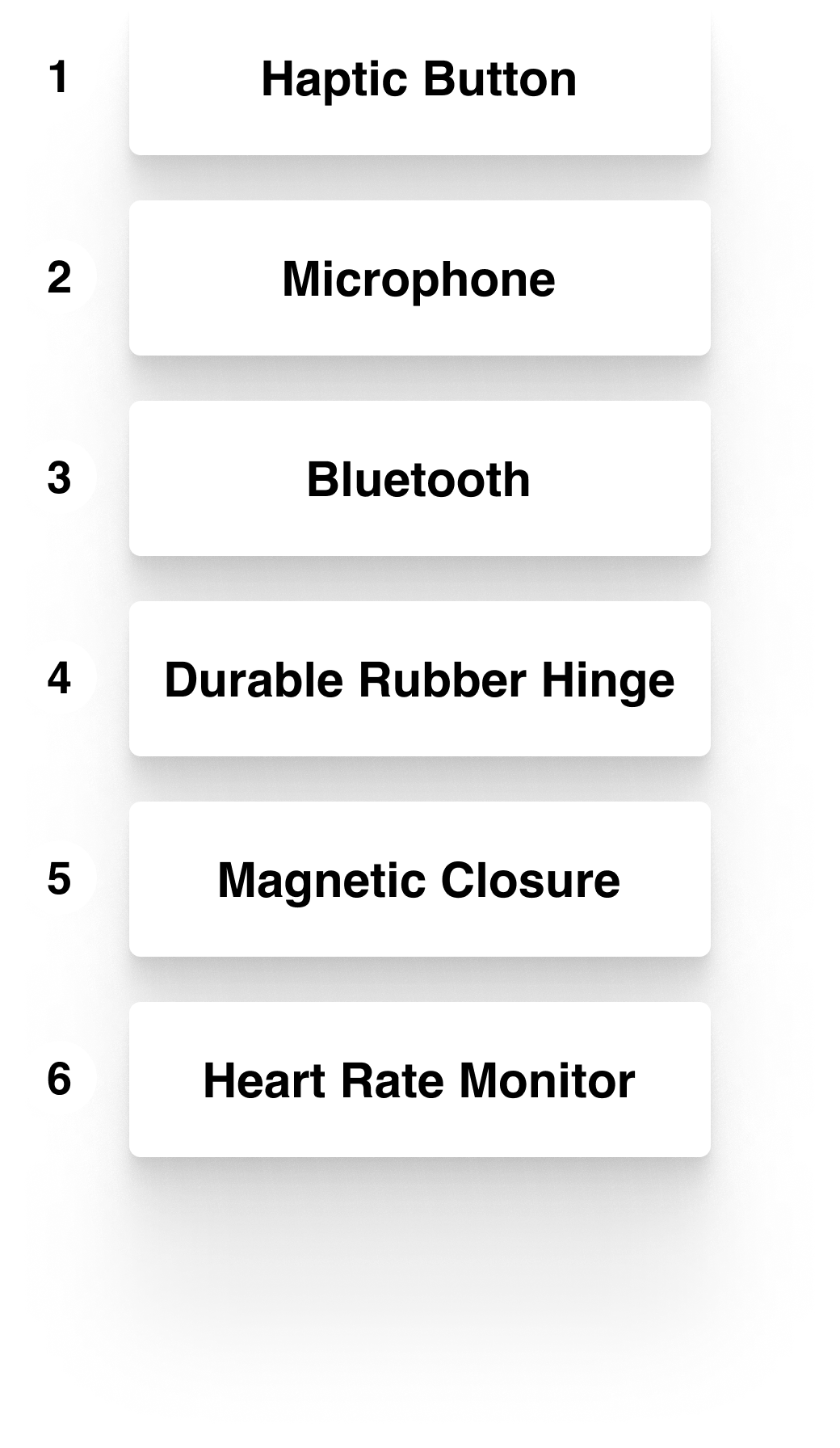

Cope’s low-cost, inconspicuous wearable, named “Copilot,” uses environmental sound recognition to document triggers when pressed.

Copilot

Thanks to its magnetic closure, Copilot can be worn almost anywhere on the body, as long as it makes contact with the skin.

The lightweight, discreet device transmits data via Bluetooth and can be easily located through the app.

Features

The device pairs inexpensive technology with machine learning to provide accurate, relevant suggestions that help users manage their responses to triggers.

Trends

Cope aggregates trends in the user’s trigger responses and displays them on the landing screen. Each trend is visualized as a bubble that grows or shrinks in proportion to how frequently that trigger has an impact. These changes in size gently and non-intrusively show users which areas they can focus on and improve.
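The case study does not say whether a bubble's radius or its area tracks frequency. As a minimal sketch, the code below assumes bubble area is proportional to frequency, so the radius scales with the square root of the count; this keeps the perceived size honest. The function name `bubble_radii` and the `max_radius` parameter are hypothetical and not part of Cope's actual design.

```python
import math


def bubble_radii(frequencies: dict[str, int], max_radius: float = 60.0) -> dict[str, float]:
    """Map each trigger category's frequency to a bubble radius.

    Assumption (not from the case study): bubble AREA is proportional to
    frequency, so the radius scales with the square root of frequency.
    The most frequent category gets max_radius; zero frequency gets 0.
    """
    if not frequencies:
        return {}
    peak = max(frequencies.values())
    if peak == 0:
        # No triggers logged yet: every bubble collapses to nothing.
        return {name: 0.0 for name in frequencies}
    return {
        name: max_radius * math.sqrt(count / peak)
        for name, count in frequencies.items()
    }


# Example with made-up weekly counts per trigger type.
radii = bubble_radii({"auditory": 16, "visual": 4, "physical": 1})
print(radii)  # {'auditory': 60.0, 'visual': 30.0, 'physical': 15.0}
```

With area-proportional sizing, a trigger that occurs four times as often covers four times the area, while its radius only doubles. Scaling the radius directly would instead exaggerate the most frequent triggers.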

Suggestions

Cope suggests personalized actions based on the triggers logged by Copilot or the smartwatch companion app, helping the user avoid negative stimuli.

These actions offer a guide for users to start managing their trauma and regain control.

Entries

When Copilot is pressed, it documents the heart rate, time, location, and a sound playback of the trigger. Entries are organized by trigger type: auditory, visual, or physical.

Users can also add further details if desired.
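To illustrate how an entry might be modeled and grouped by trigger type, here is a rough sketch. The class and field names (`TriggerEntry`, `heart_rate_bpm`, `audio_clip_path`, `notes`, `group_by_type`) are hypothetical; the case study only states that heart rate, time, location, and a sound playback are captured, that entries are organized as auditory, visual, or physical, and that users may add more data. The sample entries are invented for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime
from enum import Enum
from typing import Optional


class TriggerType(Enum):
    AUDITORY = "auditory"
    VISUAL = "visual"
    PHYSICAL = "physical"


@dataclass
class TriggerEntry:
    """One hypothetical log entry created when Copilot is pressed."""
    trigger_type: TriggerType
    timestamp: datetime
    heart_rate_bpm: int
    location: tuple[float, float]  # (latitude, longitude)
    audio_clip_path: str           # path to the recorded sound playback
    notes: Optional[str] = None    # optional extra details added by the user


def group_by_type(entries: list[TriggerEntry]) -> dict[TriggerType, list[TriggerEntry]]:
    """Organize entries by trigger type, preserving their original order."""
    groups: dict[TriggerType, list[TriggerEntry]] = defaultdict(list)
    for entry in entries:
        groups[entry.trigger_type].append(entry)
    return dict(groups)


# Invented sample entries, for illustration only.
entries = [
    TriggerEntry(TriggerType.AUDITORY, datetime(2024, 3, 1, 9, 15), 112,
                 (47.61, -122.33), "clips/0001.m4a"),
    TriggerEntry(TriggerType.VISUAL, datetime(2024, 3, 1, 13, 40), 98,
                 (47.62, -122.35), "clips/0002.m4a", notes="Crowded platform"),
    TriggerEntry(TriggerType.AUDITORY, datetime(2024, 3, 2, 18, 5), 120,
                 (47.60, -122.32), "clips/0003.m4a"),
]
grouped = group_by_type(entries)
print({t.value: len(items) for t, items in grouped.items()})  # {'auditory': 2, 'visual': 1}
```

Keeping the user's added details in an optional field means an entry is complete as soon as Copilot is pressed, and extra context can be attached later without changing the entry's structure.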

Process

Research

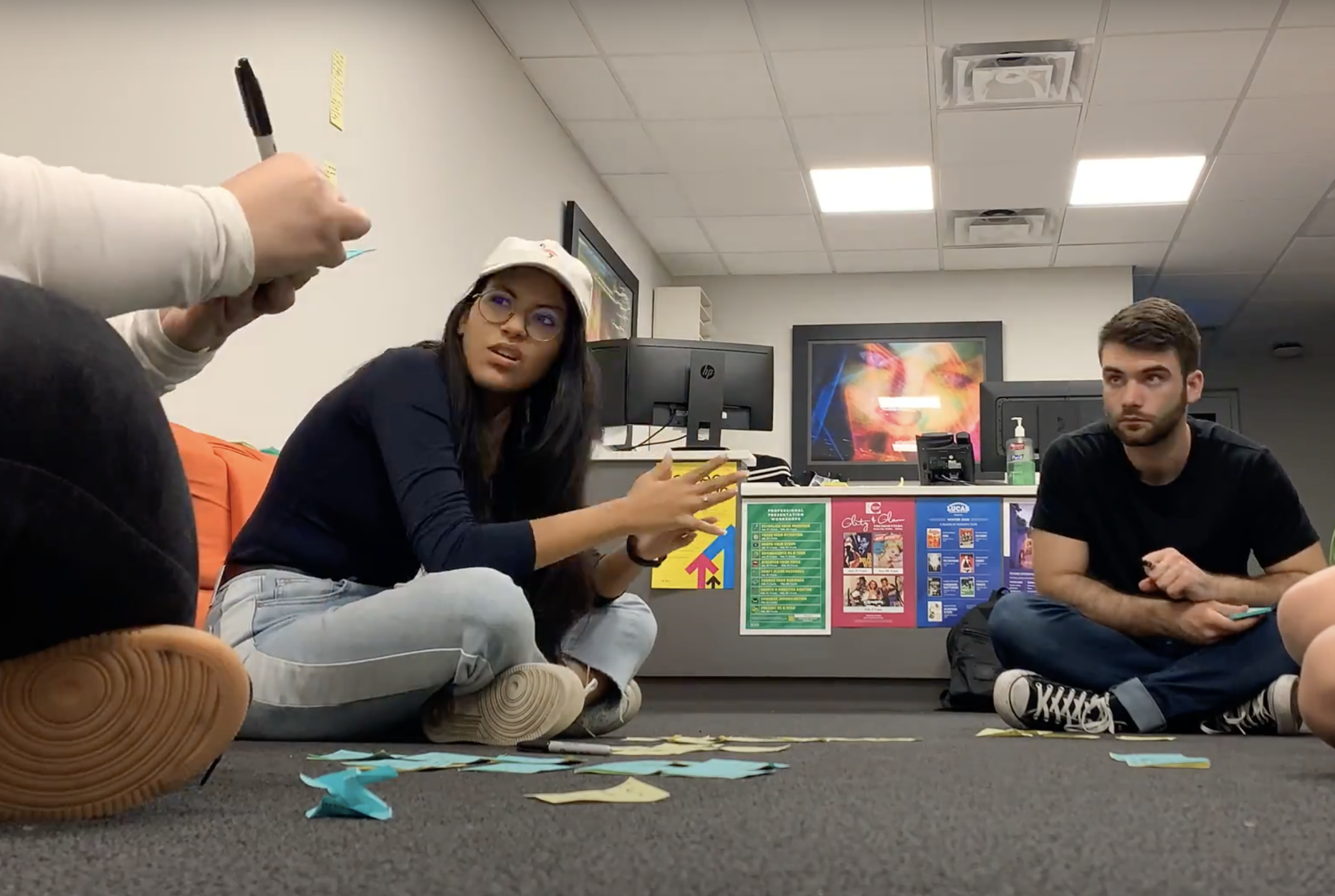

Affinity Mapping

Ideating

Product Sketches

Low-Fi User Testing

Bubbles or No Bubbles?

Interactions

Audio Playback

Simplification

Mid-Fi User Testing

Physical Prototype